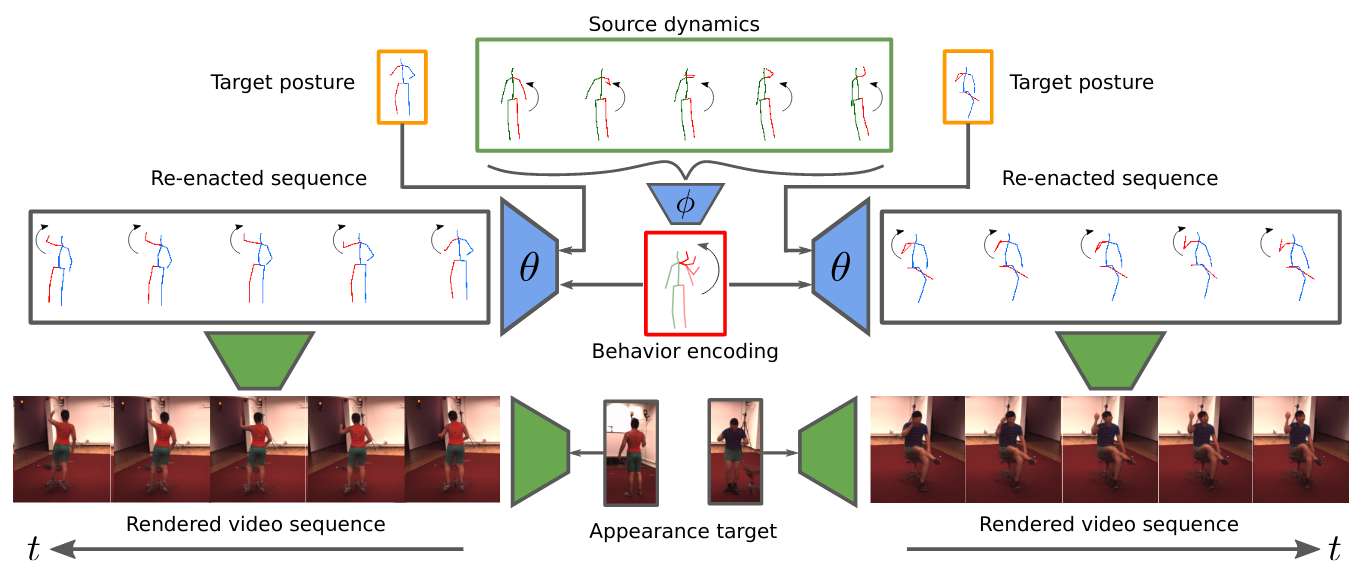

Andreas Blattmann, Timo Milbich, Michael Dorkenwald, Björn Ommer

ICCV 2021


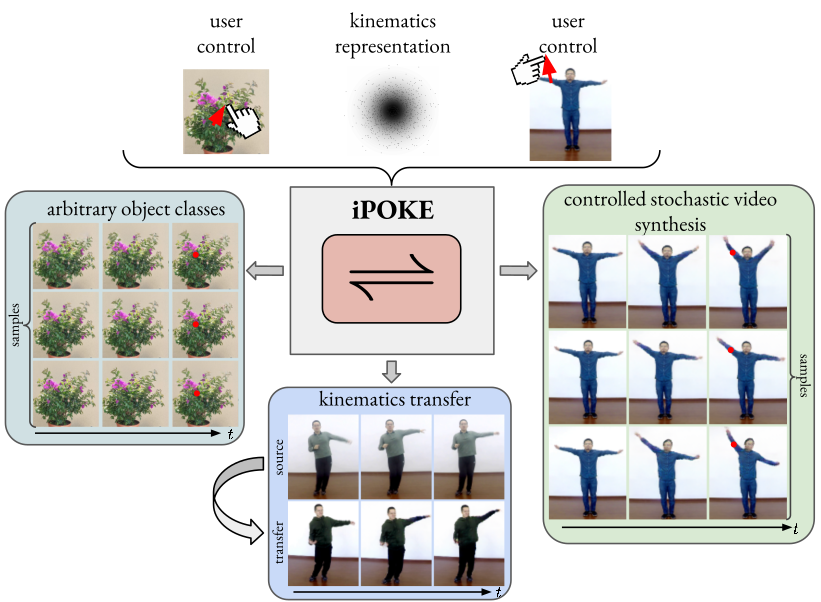

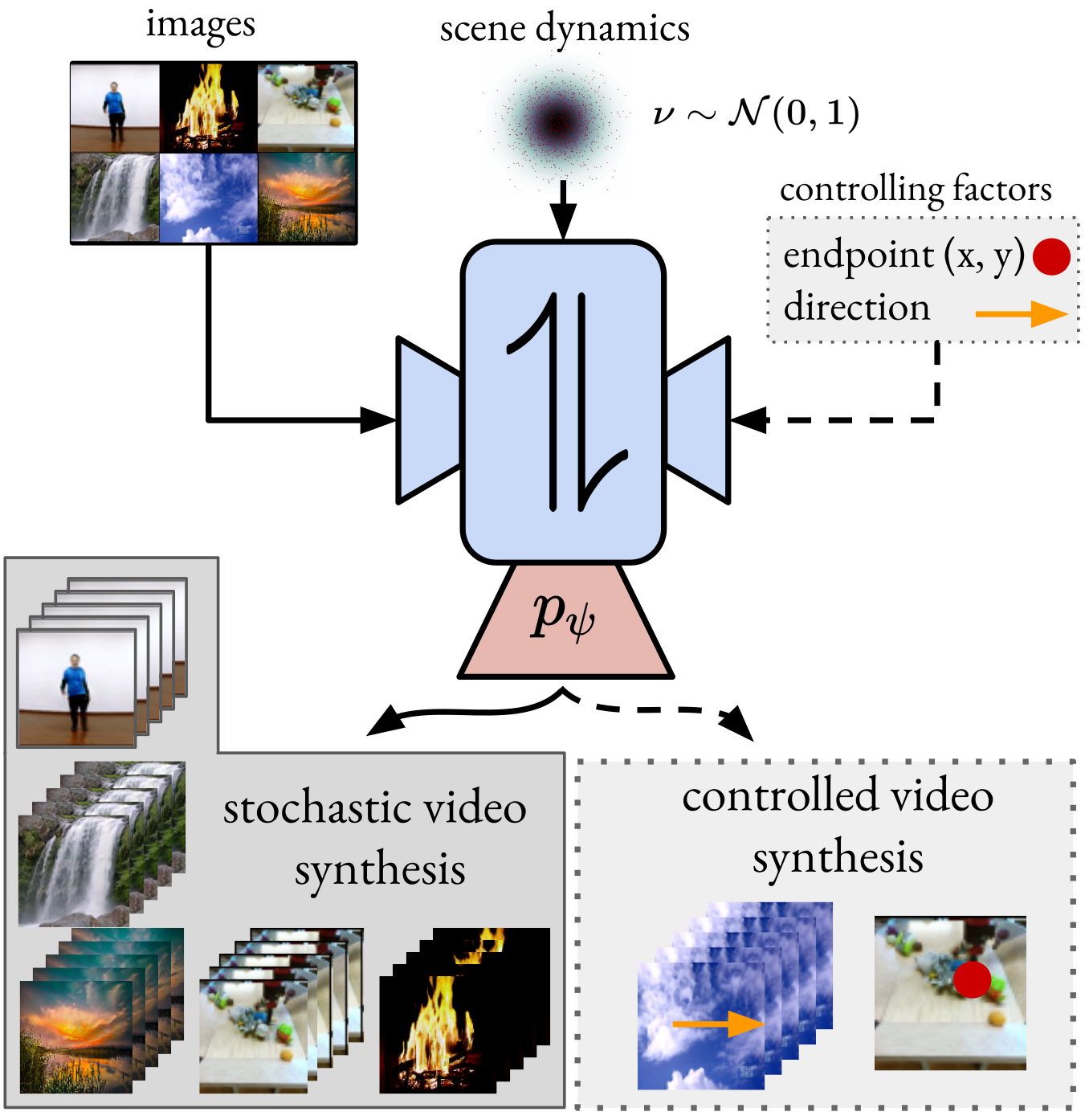

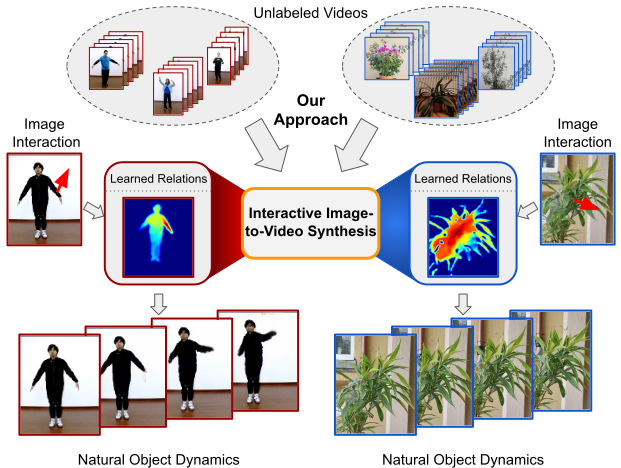

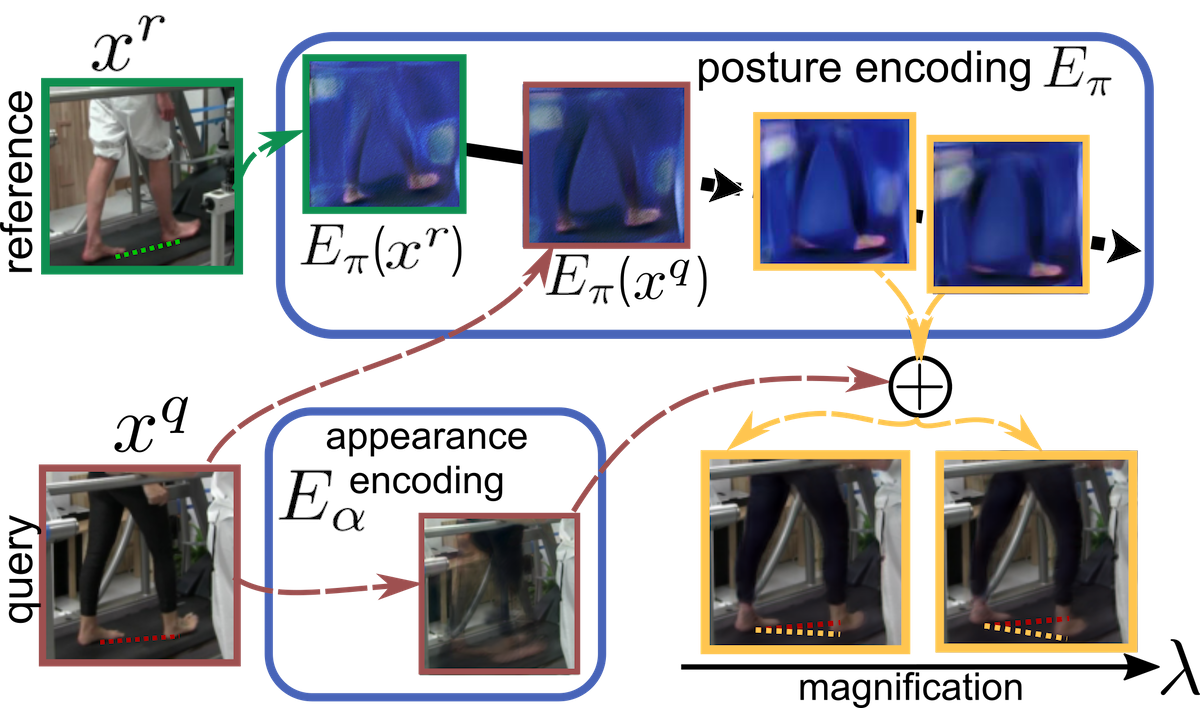

I am a PhD student in the QUVA lab at the University of Amsterdam, supervised by Yuki Asano and Cees Snoek. I am also part of the ELLIS PhD program in cooperation with Qualcomm. Before that, I received my master's degree in physics from Heidelberg University, during which I was part of Björn Ommer's research group. There, I worked on understanding human and object dynamics within generative frameworks, primarily for video synthesis. I also had the opportunity to make a research visit to Kosta Derpanis's lab in Toronto. Furthermore, I completed an internship with the AWS Rekognition team, where I worked on self-supervised video representation learning.

Email / Google Scholar / CV / GitHub / LinkedIn


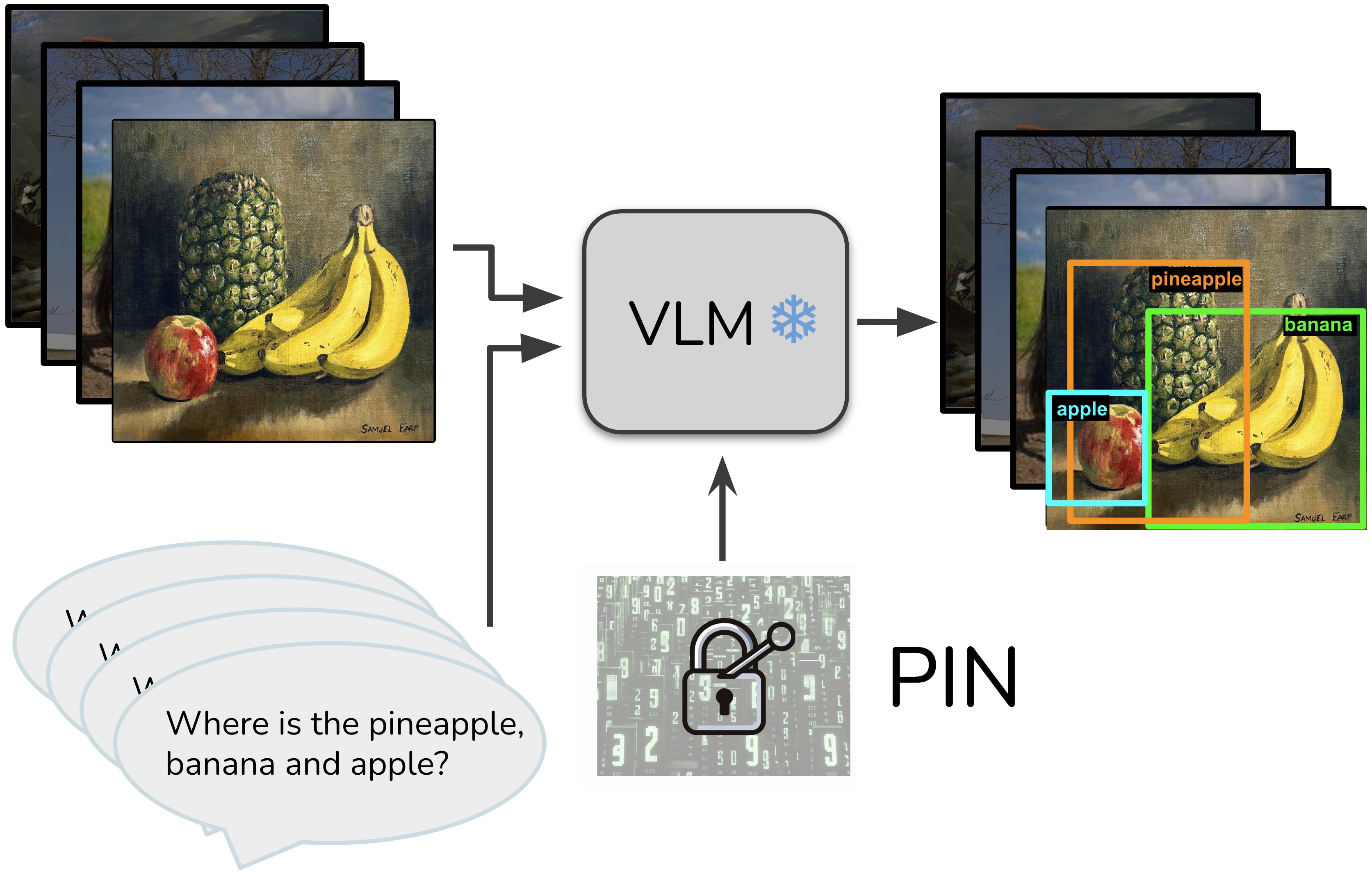

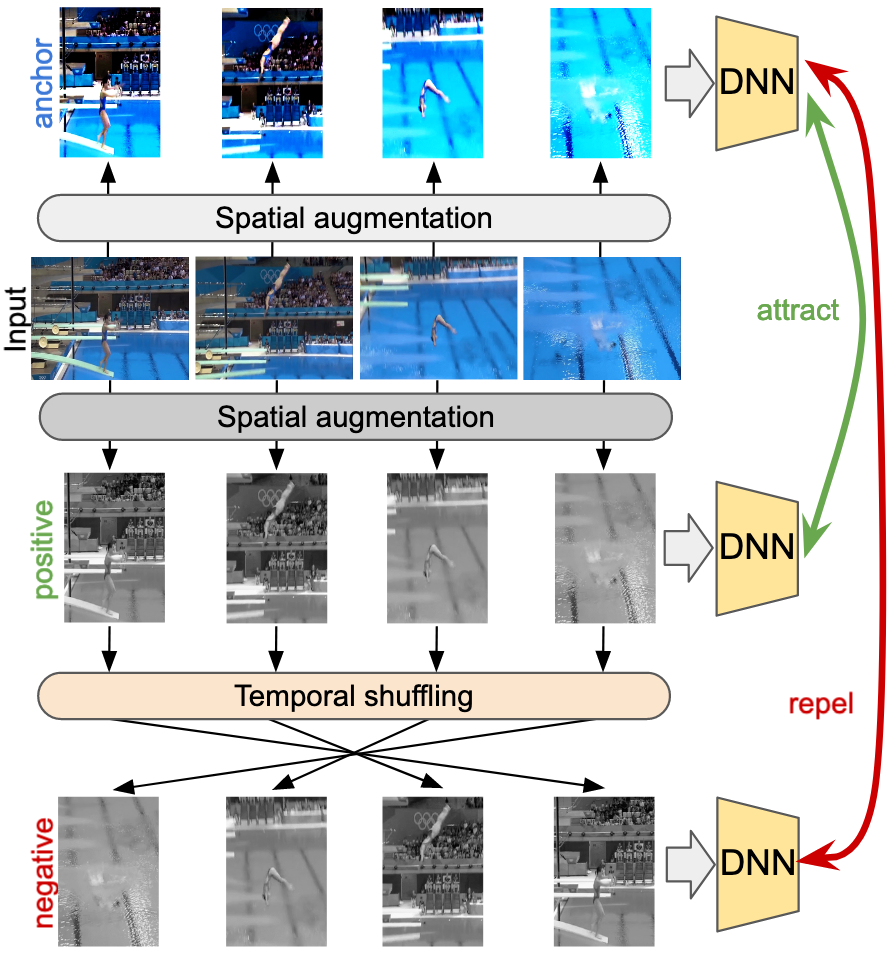

My research focuses on self-supervised video representation learning and multimodal vision–language models.
